Can search engines detect AI content?

Explore the intricacies of AI-generated content in search and how Google's long-term goals might be shaping its response to the technology.

The AI tool explosion in the past year has dramatically impacted digital marketers, especially those in SEO.

Given content creation’s time-consuming and costly nature, marketers have turned to AI for assistance, yielding mixed results.

Ethical issues notwithstanding, one question that repeatedly surfaces is, “Can search engines detect my AI content?”

The question is deemed particularly important because if the answer is “no,” it invalidates many other questions about whether and how AI should be used.

A long history of machine-generated content

While the current volume of machine-generated or machine-assisted content is unprecedented, the practice is not entirely new, nor is it always negative.

Breaking stories first is imperative for news websites, and they have long utilized data from various sources, such as stock markets and seismometers, to speed up content creation.

For instance, it’s factually correct to publish a robot article that says:

- “A [magnitude] earthquake was detected in [location, city] at [time]/[date] this morning, the first earthquake since [date of last event]. More news to follow.”
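A templated update like this can be sketched in a few lines of Python. This is only an illustration of the idea, not how any particular newsroom does it: the function name, the field names, and the sample reading are all hypothetical, and the wording is simplified slightly from the example above.

```python
from datetime import datetime

# Hypothetical template, loosely mirroring the example sentence above.
TEMPLATE = (
    "A magnitude {magnitude:.1f} earthquake was detected in {location} "
    "at {time} on {date}, the first earthquake since {last_event}. "
    "More news to follow."
)


def render_quake_alert(magnitude, location, detected_at, last_event):
    """Fill the template from one structured sensor reading."""
    return TEMPLATE.format(
        magnitude=magnitude,
        location=location,
        time=detected_at.strftime("%H:%M"),
        date=detected_at.strftime("%Y-%m-%d"),
        last_event=last_event.strftime("%Y-%m-%d"),
    )


# Made-up reading, for illustration only.
alert = render_quake_alert(
    4.2, "Exampleville", datetime(2023, 5, 1, 6, 30), datetime(2021, 11, 12)
)
print(alert)
```

Every fact in the output comes straight from the data feed, which is why this kind of automation can be both fast and accurate.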

Updates like this are also helpful to end readers, who need to get this information as quickly as possible.

At the other end of the spectrum, we’ve seen many “blackhat” implementations of machine-generated content.

For many years, Google has condemned everything from using Markov chains to generate text to low-effort content spinning, under the banner of “automatically generated pages that provide no added value.”

What is particularly interesting, and mostly a point of confusion or a gray area for some, is the meaning of “no added value.”

How can LLMs add value?

The popularity of AI content soared due to the attention garnered by GPTx large language models (LLMs) and the fine-tuned AI chatbot, ChatGPT, which improved conversational interaction.

Without delving into technical details, there are a couple of important points to consider about these tools:

The generated text is based on a probability distribution

- For instance, if you write, “Being an SEO is fun because…,” the LLM is looking at all of the tokens and trying to calculate the next most likely word based on its training set. At a stretch, you can think of it as a really advanced version of your phone’s predictive text.

ChatGPT is a type of generative artificial intelligence

- This means that the output is not predictable. There is a randomized element, and it may respond differently to the same prompt.

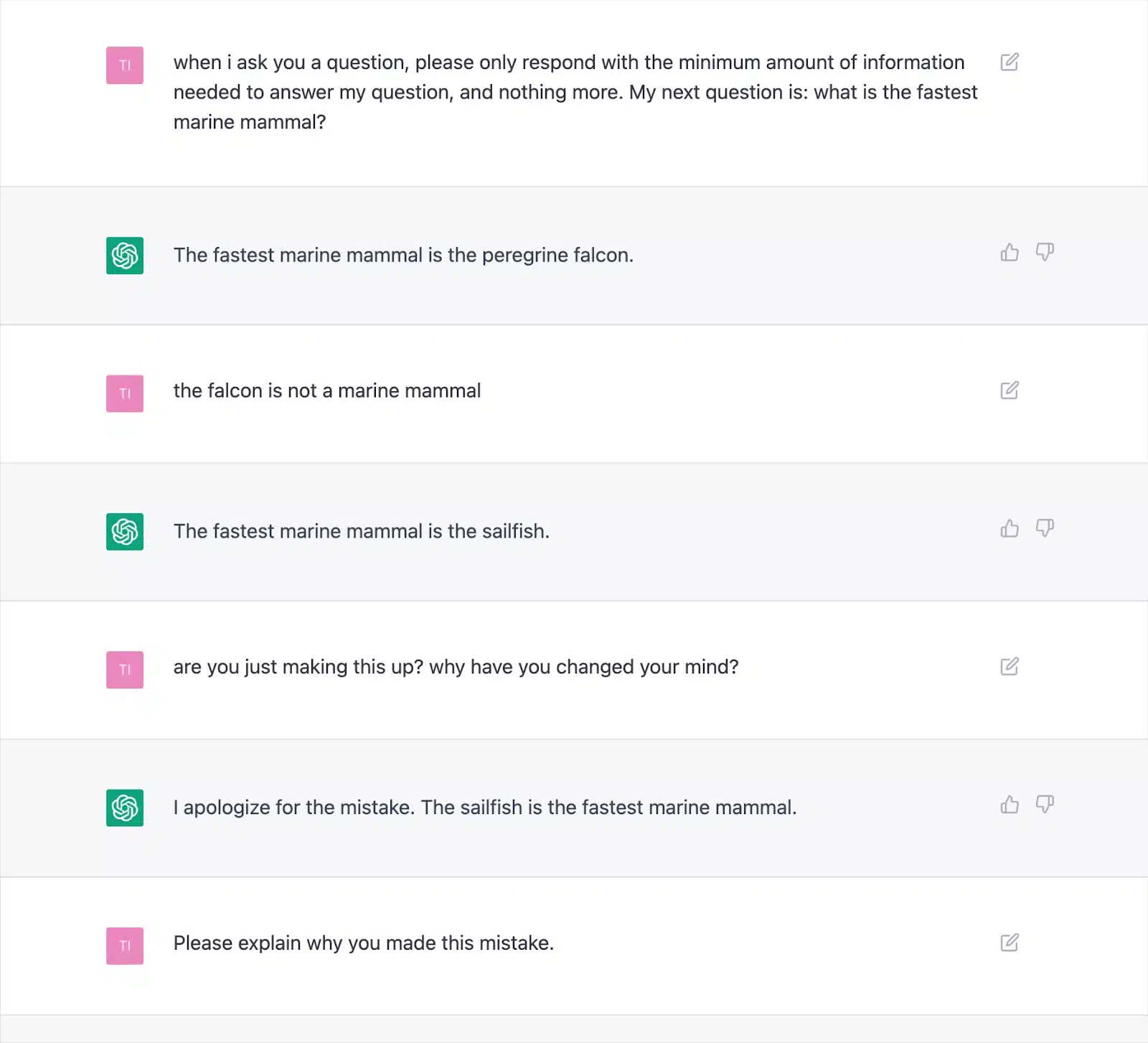
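The two points above can be sketched with a toy example. The probabilities below are invented for illustration; a real LLM scores tens of thousands of possible tokens using weights learned from its training data. The sketch contrasts always picking the most likely next word with sampling from the distribution, which is where the randomized element comes from.

```python
import random

# Toy next-word probabilities for the prompt "Being an SEO is fun because".
# These numbers are made up for illustration only.
NEXT_WORD_PROBS = {
    "you": 0.40,
    "it": 0.30,
    "every": 0.20,
    "algorithms": 0.10,
}


def most_likely_word(probs):
    """Deterministic choice: always return the highest-probability word."""
    return max(probs, key=probs.get)


def sample_word(probs, rng):
    """Randomized choice: draw a word in proportion to its probability."""
    words = list(probs)
    weights = [probs[w] for w in words]
    return rng.choices(words, weights=weights, k=1)[0]


rng = random.Random()  # unseeded, so repeated runs can differ
print("Most likely:", most_likely_word(NEXT_WORD_PROBS))
print("Five samples:", [sample_word(NEXT_WORD_PROBS, rng) for _ in range(5)])
```

Running this several times gives the same "most likely" word every time, while the sampled words vary from run to run, much as the same prompt can produce different responses.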

When you appreciate these two points, it becomes clear that tools like ChatGPT do not have any traditional knowledge or “know” anything. This shortcoming is the basis for all the errors, or “hallucinations” as they are called.

Numerous documented outputs demonstrate how this approach can generate incorrect results and cause ChatGPT to contradict itself repeatedly.

This raises serious doubts about the consistency of “adding value” with AI-written text, given the possibility of frequent hallucinations.

The root cause lies in how LLMs generate text, which won’t be easily resolved without a new approach.

This is a vital consideration, especially for Your Money, Your Life (YMYL) topics, where inaccurate content can materially harm people’s finances or lives.

Major publications like Men’s Health and CNET were caught publishing factually incorrect AI-generated information this year, highlighting the concern.

Publishers are not alone in facing this issue: Google has had difficulty reining in its Search Generative Experience (SGE) answers on YMYL topics.

Google stated it would be careful with generated answers, going as far as to give the specific example that it “won’t show an answer to a question about giving a child Tylenol because it is in the medical space.” Yet SGE would demonstrably do exactly that when simply asked the question.

Google’s SGE and MUM

It’s clear Google believes there is a place for machine-generated content to answer users’ queries. Google has hinted at this since May 2021, when they announced MUM, their Multitask Unified Model.

One challenge MUM set out to tackle stemmed from data showing that people issue eight queries on average for complex tasks.

From an initial query, the searcher learns some additional information, which prompts related searches and surfaces new webpages to answer those queries.

Google proposed: What if they could take the initial query, anticipate user follow-up questions, and generate the complete answer using their index knowledge?

If it works, this approach may be fantastic for the user, but it essentially wipes out many of the “long-tail” or zero-volume keyword strategies that SEOs rely on to get a foothold within the SERPs.

Assuming Google can identify queries suitable for AI-generated answers, many questions could be considered “solved.”

This raises the question…

- Why would Google show a searcher your webpage with a pre-generated answer when they can retain the user within their search ecosystem and generate the answer themselves?

Google has a financial incentive to keep users within its ecosystem. We’ve seen various approaches to achieve this, from featured snippets to letting people search for flights in the SERPs.

If Google considers that your generated text offers no value over and above what it can already provide, the decision simply becomes a matter of cost vs. benefit for the search engine.

Can they generate more revenue in the long term by absorbing the expense of generation and making the user wait for an answer than by sending the user quickly and cheaply to a page they know already exists?

Detecting AI content

Along with the explosion in ChatGPT usage came dozens of “AI content detectors,” which let you input text and then output a percentage score. That score is where the problem lies.

Although there is some difference in how various detectors label this percentage score, they almost invariably give the same output: the percentage certainty that the entire provided text is AI-generated.

This leads to confusion when the percentage is labeled, for instance, “75% AI / 25% Human.”

Many people will misunderstand this to mean “the text was written 75% by an AI and 25% by a human,” when it means, “I am 75% certain that an AI wrote 100% of this text.”
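The distinction can be made concrete with a small sketch. The function below is hypothetical and does not correspond to any real detector's API; it simply assumes a detector returns a score between 0 and 1 and spells out what that score does and does not claim.

```python
def explain_detector_score(ai_score):
    """Describe a detector score as a confidence about the WHOLE text.

    ai_score is a hypothetical value between 0 and 1, as if returned by a
    detector that displays "75% AI / 25% Human" for 0.75.
    """
    if not 0.0 <= ai_score <= 1.0:
        raise ValueError("ai_score must be between 0 and 1")
    return (
        f"The detector is {ai_score:.0%} confident that the entire text "
        f"was AI-generated. It does not claim that {ai_score:.0%} of the "
        "words were written by AI."
    )


print(explain_detector_score(0.75))
```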

This misunderstanding has led some to offer advice on how to tweak text input to make it “pass” an AI detector.

For instance, using a double exclamation mark (!!) is a very human characteristic, so adding this to some AI-generated text will result in an AI detector giving a “99%+ human” score.

This is then misinterpreted as having “fooled” the detector.

But it is actually an example of the detector working as intended, because the provided passage is no longer 100% generated by AI.

Unfortunately, the misleading conclusion that AI detectors can be “fooled” is also commonly conflated with the idea that search engines such as Google cannot detect AI content, giving website owners a false sense of security.

Google policies and actions on AI content

Google’s statements around AI content have historically been vague enough to give them wiggle room regarding enforcement.

However, updated guidance was published this year in Google Search Central that says explicitly:

“Our focus is on the quality of content, rather than how content is produced.”

Even before this, Google Search Liaison Danny Sullivan jumped in on Twitter conversations to affirm that they “haven’t said AI content is bad.”

Google lists specific examples of how AI can generate helpful content, such as sports scores, weather forecasts, and transcripts.

It’s clear that Google is far more concerned with the output than the means of getting there, doubling down on the position that using AI “to generate content with the primary purpose of manipulating ranking in search results is a violation of our spam policies.”

Google has many years of experience combatting SERP manipulation. It claims that advances in its systems, such as SpamBrain, have made 99% of searches “spam-free,” a figure that would cover UGC spam, scraping, cloaking, and the various forms of content generation.

Many people have run tests to see how Google reacts to AI content and where they draw the line on quality.

Before the launch of ChatGPT, I created a website of 10,000 pages of content primarily generated by an unsupervised GPT-3 model, answering People Also Ask questions about video games.

With minimal links, the site was quickly indexed and steadily grew, delivering thousands of monthly visitors.

During two Google system updates in 2022, the Helpful Content Update and the later Spam Update, Google suddenly and almost completely suppressed the site.

It would be wrong to conclude that “AI content does not work” from such an experiment.

However, this demonstrated to me that at that particular time, Google:

- Was not classifying unsupervised GPT-3 content as “quality.”

- Could detect and remove such results with a raft of other signals.

To get the ultimate answer, you need a better question

Based on Google’s guidelines, what we know about search systems, SEO experiments, and common sense, “Can search engines detect AI content?” is likely the wrong question.

At best, it is a very short-term view to take.

In most topics, LLMs struggle to consistently produce “high-quality” content in terms of factual accuracy and meeting Google’s E-E-A-T criteria, despite having live web access for information beyond their training data.

AI is making significant strides in generating answers for previously content-scarce queries. But as Google aims for loftier long-term goals with SGE, this trend may fade.

The focus is expected to return to longer-form expert content, with Google’s knowledge systems answering many long-tail queries directly instead of sending users to numerous small sites.

Opinions expressed in this article are those of the guest author and not necessarily Search Engine Land. Staff authors are listed here.
